Harrison Vanderbyl Email

Chief Technology Officer, Featherless AI

San Francisco, CA

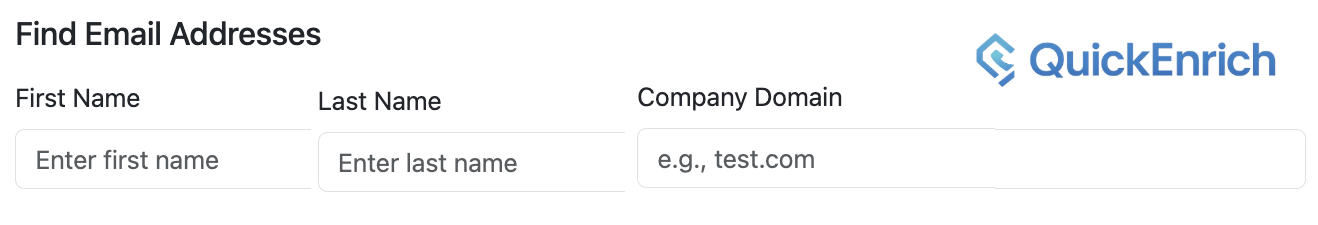

Current Roles

Employees: 14

Revenue: $2M

About

The future of compute is AI inference, and the future of AI inference is featherless.ai.

We enable Serverless AI with our GPU orchestration system. See it in action on our public cloud, which offers serverless inference for 2,400+ open weight models from Hugging Face.

Unlock your model catalog with a GPU fleet sized for inference throughput, not model count.

Featherless AI Address

San Francisco, CA

United States